Join us to understand the internals of language models and ML systems!

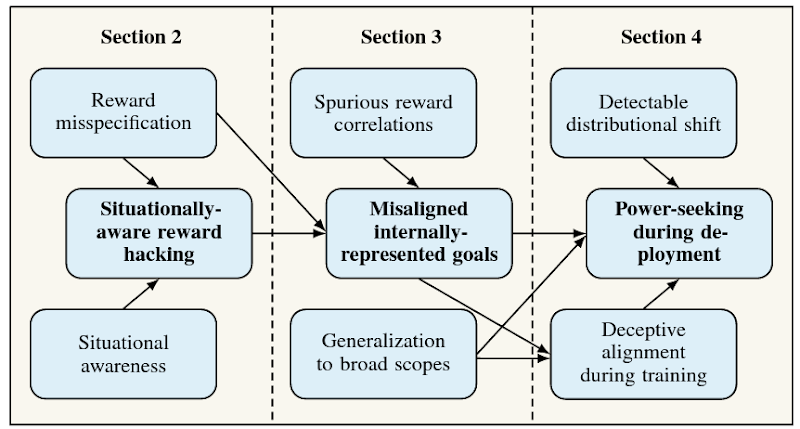

Machine learning is becoming an increasingly important part of our lives and researchers are still working to understand how neural networks represent the world.

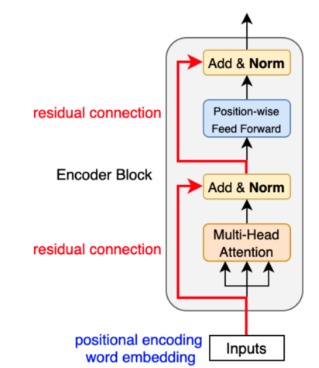

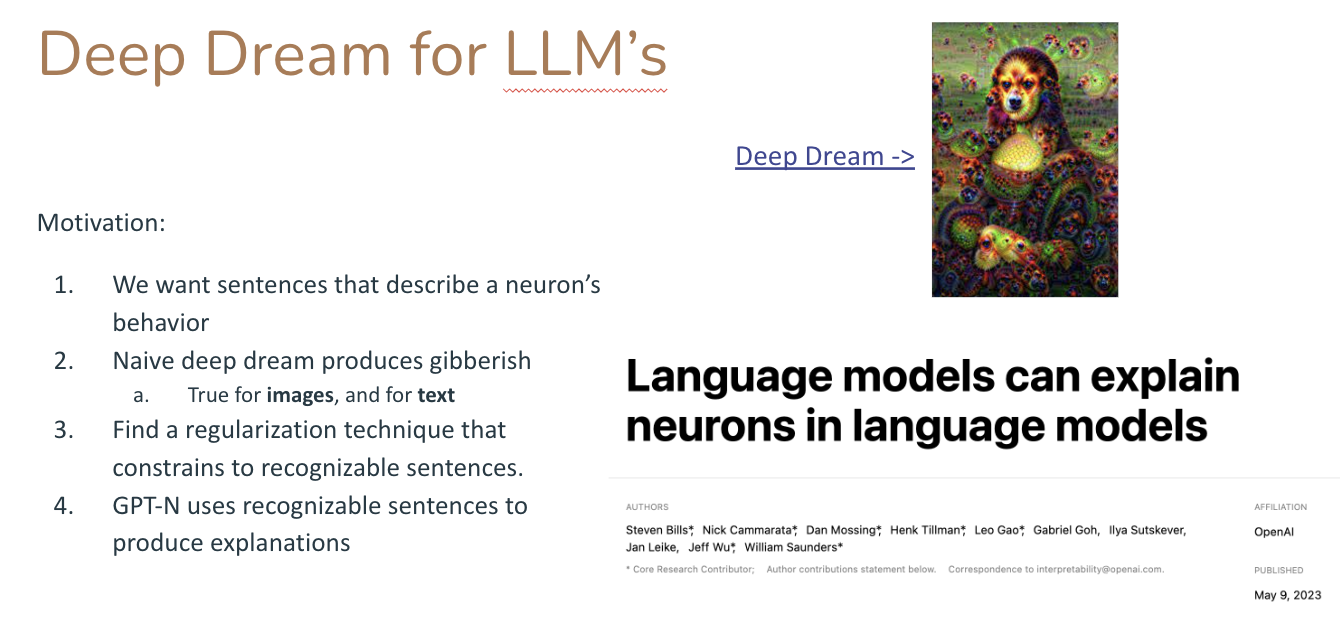

Mechanistic interpretability is a field focused on reverse-engineering neural networks. This can both be how Transformers do a very specific task and how models suddenly improve. Check out our speaker Neel Nanda's 200+ research ideas in mechanistic interpretability.

Sign up below to be notified before the kickoff!

Alignment Jam hackathons

Join us in this iteration of the Alignment Jam research hackathons to spend 48 hour with fellow engaged researchers and engineers in machine learning on engaging in this exciting and fast-moving field!

Join the Discord where all communication will happen. Check out research project ideas for inspiration and the in-depth starter resources under the "Resources" tab.

Rules

You will participate in teams of 1-5 people and submit a project on the entry submission page. Each project consists of multiple parts: 1) The PDF report, 2) a maximum 10-minute video overview, 3) title, summary, and descriptions.

You are allowed to think about your project and engage with the starter resources before the hackathon starts but your core research work should happen during the duration of the hackathon.

Besides these two points, the hackathons are mainly a chance for you to engage meaningfully with real research work into some of the state-of-the-art interpretability!

Schedule

- Friday 17:30 UTC: Keynote talk with Neel Nanda to inspire your projects and provide an introduction to the topic. Esben Kran will also give a short overview of the logistics.

- Saturday and Sunday 14:00 UTC: Project discussion sessions on the Discord server.

- Sunday at 18:00 UTC: Online ending session

- Wednesday at 19:00 UTC: Project presentations

.gif)