Explore safer AI with fellow researchers and enthusiasts

New large AI models are released nearly every week. We need ways to evaluate these models, especially those as complex as GPT-4, to ensure they will not fail critically after deployment. Examples of such failures include autonomous power-seeking, biases toward unethical behavior, and other phenomena that emerge in deployment, such as inverse scaling.

Join the Alignment Jam on safety benchmarks and spend a weekend with AI safety researchers, formulating and demonstrating new ideas for measuring the safety of artificially intelligent systems.

Rewatch the keynote talk by Alexander Pan above.

Sign up below to be notified before the kickoff! Below you can also read the schedule, find instructions for how to participate, and get inspiration.

Schedule

The schedule depends on where you participate from, but below are the international virtual events that anyone can tune into, wherever they are.

Friday, June 30th

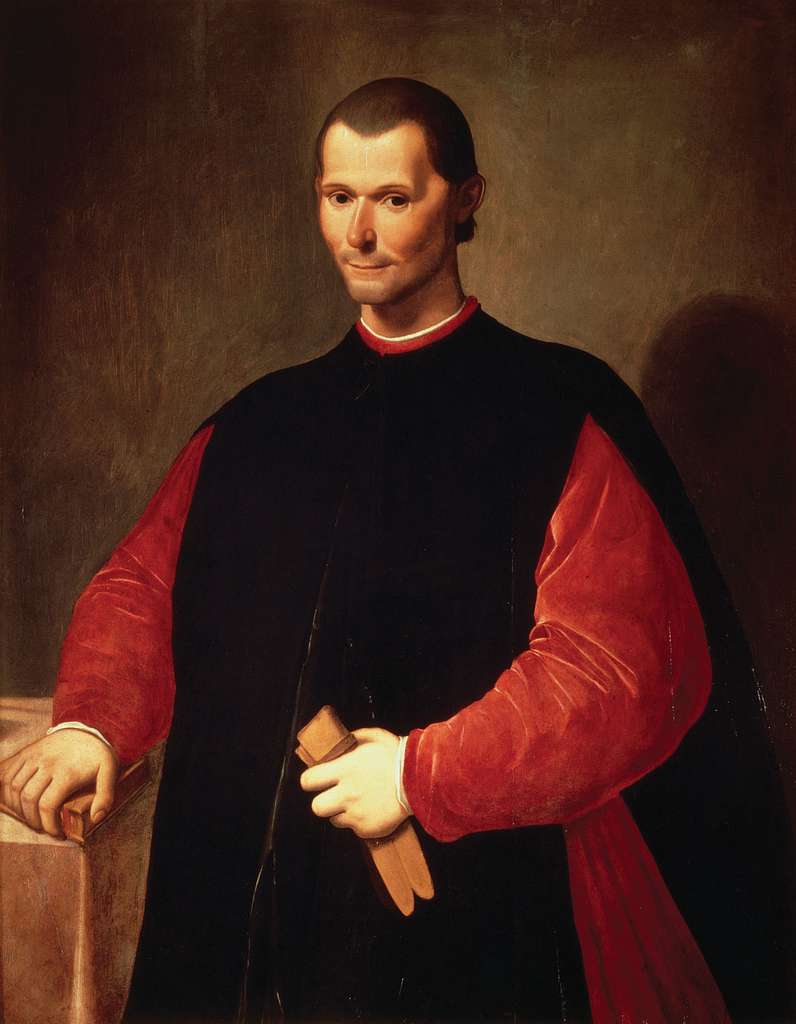

- UTC 17:00: Keynote talk by Alexander Pan, lead author of the MACHIAVELLI benchmark paper, plus logistical information from the organizing team

- UTC 18:00: Team formation and coordination

Saturday, July 1st

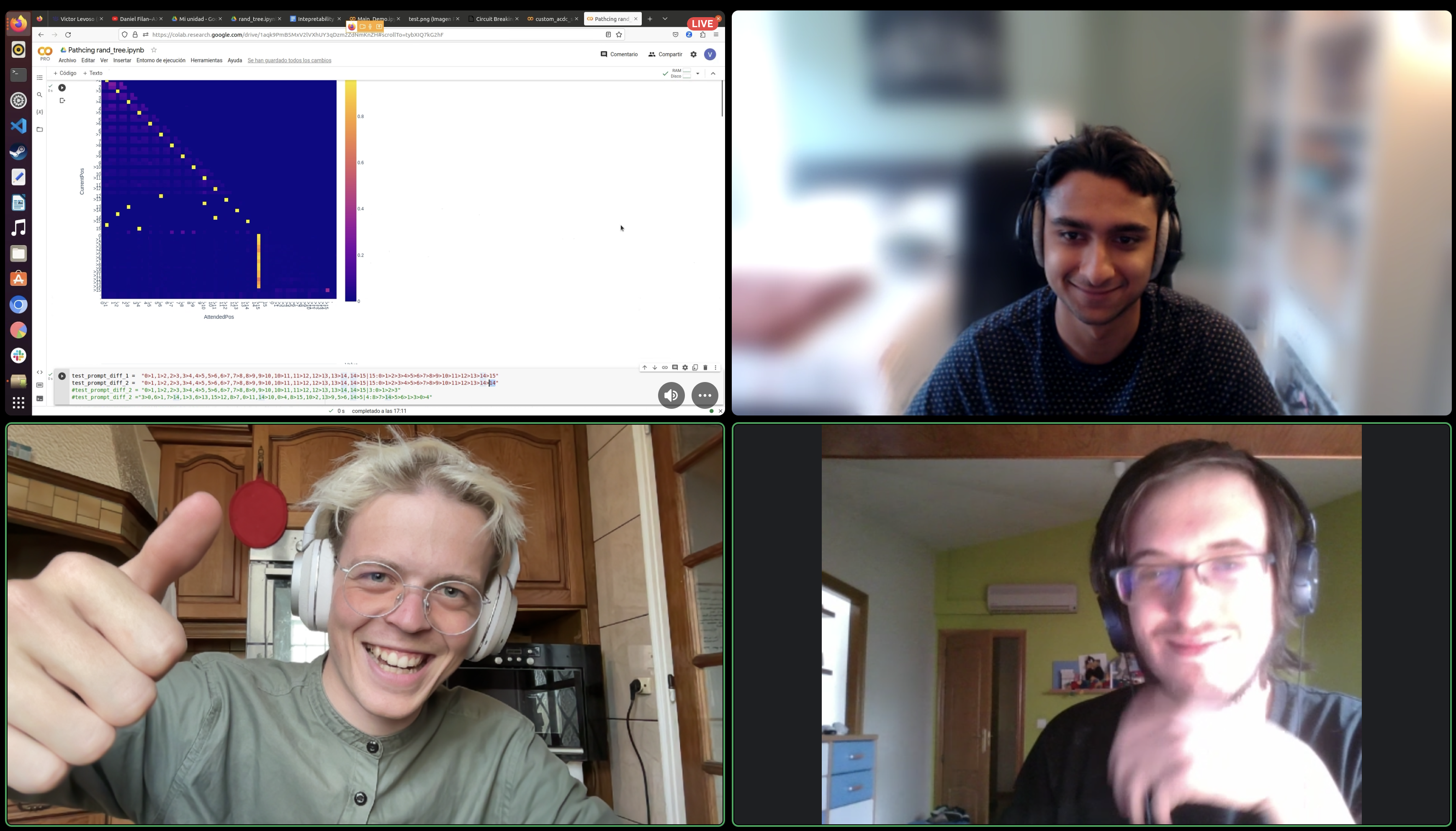

- UTC 14:00: Virtual project discussions

- UTC 17:00: Talk by Antonio Miceli-Barone on "The Larger They Are, the Harder They Fail: Language Models do not Recognize Identifier Swaps in Python" (Twitter thread), which describes an inverse scaling phenomenon in large language models.

Sunday, July 2nd

- UTC 14:00: Virtual project discussions

- UTC 19:00: Online closing session

- Monday, July 3rd, UTC 02:00: Submission deadline

Wednesday, July 5th

- UTC 19:00: International project presentations!

Submission details

You are required to submit:

- A PDF report using the template linked on the submission page

- A video of at most 10 minutes presenting your findings and results (see the submission page for inspiration and instructions on how to make it)

You are also encouraged, but not required, to submit:

- A slide deck describing your project

- A link to your code

- Any other material you would like to link

Inspiration

Here are a few inspiring papers, talks, and posts about safety benchmarks. See more starter code, articles, and readings under the "Resources" tab.

- Inverse scaling laws competition (round 1 winners, round 2 winners)

- Uncovering limitations of large language model editing